Extending Code Llama

I’ve been diggin' Code Llama lately. It's free, easy to set up, and using Code Llama via Ollama can be a lot of fun. Code Llama has already proved itself useful out-of-the-box; nevertheless, I was curious if I could boost my productivity by using retrieval-augmented generation (or RAG for short). I decided on pointing Code Llama directly at specific code for question answering. I wanted to see if Code Llama could help me understand an underlying code base and help me develop new features.

If you're willing to roll up your sleeves, this is a fairly easy process! All you have to do is write a little Python code.

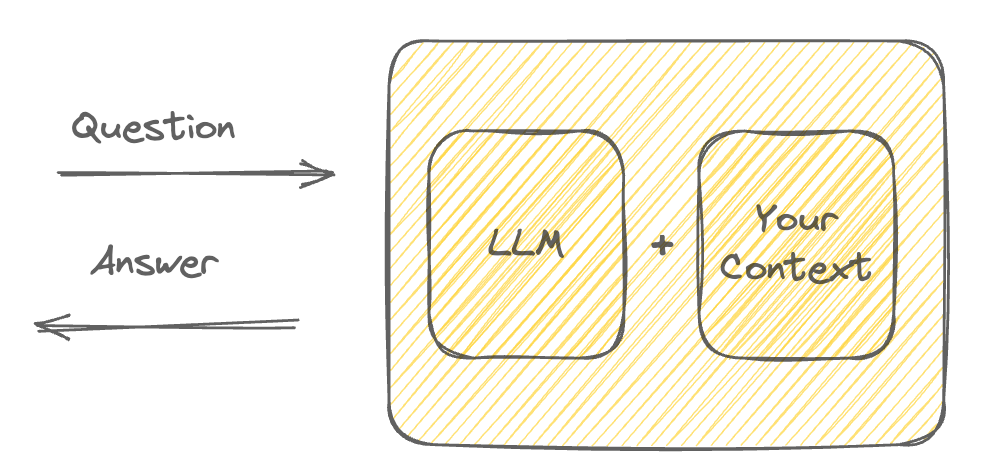

What's RAG?

Briefly, RAG is technique that combines something specific (like code, documents, images, etc) with a more general LLM. In this way, as Kim Martineau explains, RAG improves the:

quality of LLM-generated responses by grounding the model on external sources of knowledge to supplement the LLM’s internal representation of information.

For instance, if I asked an LLM, such as OpenAI's ChatGPT, to summarize everything I've recently written about leadership, I'd be out of luck. ChatGPT has no knowledge of my recent blog entries as the model was trained before I wrote my articles. Nevertheless, I could provide the model with some relevant information, such as everything amazing I've recently written and then ask the LLM to summarize my leadership articles. Depending on the quality and quantity of the specific information provided, the LLM could surprise you!

In essence, via RAG, you provide the LLM with some specific information, and then the corresponding answers to your questions are more informed through the use of that specific data.

Code Llama and RAG

Leveraging RAG with Code Llama takes about 60 lines of Python code. What's more, I leveraged Ollama along with LangChain. You'll need to ensure that you've both pulled and run the Code Llava LLM via Ollama beforehand. The steps to create a RAG with Code Llama are:

- Create an instance of an LLM.

- Create a prompt (i.e. context for the LLM).

- Load a directory full of code.

- Split the various files from step 3 into chunks.

- Store those chunks in a vector database.

- Create a chain from all the above which generates answers to questions.

I created a simple class named ChatCode that has three methods: an initialization one (i.e. Python'sinit), ingest, and an ask. The init method creates an instance of ChatOllama using the Code Llama LLM and creates a prompt context.

def __init__(self):

self.model = ChatOllama(model="codellama")

self.prompt = PromptTemplate.from_template(

"""

<s> [INST] You are an expert programmer for

question-answering tasks. Use the following pieces of retrieved

context to answer the question. If you don't know the answer,

just say that you don't know. Use three sentences maximum and

keep the answer concise. [/INST] </s>

[INST] Question: {question}

Context: {context}

Answer: [/INST]

"""

)ChatCode's initialize method.

The key aspect of init is the creation of a PromptTemplate, which seeds the LLM with specific context (i.e the LLM should act as an expert programmer). The context provided connects the interaction between the LLM and a user. As Harpreet Sahota eloquently states, LangChain's PromptTemplate class enables

the refined language through which we converse with sophisticated AI systems, ensuring precision, clarity, and adaptability in every interaction.

The magic of RAG happens in the ingest method where a number of important operations occur. Firstly, a file system path is provided and all Ruby files are loaded into memory via the GenericLoader class. Those Ruby files are then spit into chunks for efficient indexing into a vector store. The logic here is obviously specific to Ruby and you can easily do the same for Python, Go, Java, or a handful of other languages.

def ingest(self, path: str):

loader = GenericLoader.from_filesystem(

path, glob="**/[!.]*", suffixes=[".rb"],

parser=LanguageParser(),

)

text_splitter = RecursiveCharacterTextSplitter.from_language(

language=Language.RUBY, chunk_size=1024, chunk_overlap=100)

chunks = text_splitter.split_documents(loader.load())

chunks = filter_complex_metadata(chunks)

vector_store = Chroma.from_documents(

documents=chunks, embedding=FastEmbedEmbeddings())

retriever = vector_store.as_retriever(

search_type="similarity_score_threshold",

search_kwargs={"k": 3,"score_threshold": 0.5}

)

self.chain = ({"context": retriever,

"question": RunnablePassthrough()}

| self.prompt

| self.model

| StrOutputParser())ChatCode's ingest method.

The vector store in this case is Chroma, which by default stores vectors (i.e. documents) in memory. A LangChain Retriever instance is created that returns results from Chroma given a specific query. In this case, a similarity score threshold retrieval is used, which returns a maximum of three documents above a threshold of 0.5. Finally, a LangChain chain is created that links everything together. This final step is where RAG's power is leveraged as an existing model, like Code Llama, is combined with specific context, such as Ruby code.

With RAG set up, asking a question of a code base couldn't be any easier! The ask method simply invokes the aforementioned chain like so:

def ask(self, query: str):

if not self.chain:

return "Please, add a directory first."

return self.chain.invoke(query)CodeChat's ask method.

As you can see, there's not a lot of complex code. It's quite simple! Specific context is loaded into memory and combined with an LLM for a more precise, context-aware answer. In this case, asked questions will be answered by what's found in the underlying indexed code.

Show me the money, baby!

As I have a few different Rails application on hand, I fired up the Python interpreter on my local machine and ingested a simple dictionary application named Locution.

>>> from chat_code import ChatCode

>>> code = ChatCode()

>>> code.ingest("/Users/ajglover/Development/projects/github/locution")The ingest method might take a few moments depending on how many files are loaded. Once ingest returns, you can then invoke the ask method. I decided to start simple and give CodeChat a softball question:

>>> code.ask("what language was this code base written in?")Starting with an easy question!

While the question is an easy one, it also demonstrates that CodeChat is focused on my Locution code base!

'The code base was written in Ruby.'A softball question yields the correct response!

With CodeChat providing a specific answer, I'm confident that my RAG setup with Code Llama is working correctly. Next, I'll ask a more relevant question for what would be a typical use case for a question-answer style application pointed at a code base. In my case, I want to know about the underlying database.

>>> code.ask("what database tables does this application work with?")

I could have also looked at the models.

CodeChat dutifully tells me that two tables are defined (did I mention this is a simple Rails application?).

'The application works with two database tables, "definitions" and "words". The "definitions" table has columns for the definition and example sentence, as well as a foreign key referencing the "words" table. The "words" table has columns for the word and part of speech, as well as timestamps for creation and update.'A detailed response describing the two tables defined in the application.

The generated response is quite helpful as it describes the details of the two tables words and definitions. But I'm not quite satisfied as I'd like to see the DDL. It's no problem though – all I have to do is ask!

>>> code.ask("can you show me the DDL for the two tables defined?")Gettin' specific with CodeChat!

CodeChat gives me what appears as a cogent answer:

Sure, here are the DDL statements for the two tables defined in the provided context:

1. `definitions` table:

CREATE TABLE definitions (

definition VARCHAR(255),

example_sentence VARCHAR(255),

word_id BIGINT NOT NULL,

created_at DATETIME NOT NULL,

updated_at DATETIME NOT NULL,

PRIMARY KEY (word_id));

2. `words` table:

CREATE TABLE words (

word VARCHAR(255),

part_of_speech VARCHAR(255),

created_at DATETIME NOT NULL,

updated_at DATETIME NOT NULL,

PRIMARY KEY (word));

Note that the `definitions` table has a foreign key relationship with the `words` table on the `word_id` column.Is this the DDL for the underlying database?

I was immediately suspicious of a few odd aspects of the answer, however. While there are indeed two tables, word_id isn't the primary key of the definitions table nor is word the primary key of the words table. Skepticism towards what an LLM will confidently tell you is a key skill! The good news is that with code, you can usually spot any issues quickly.

Perhaps asking low level database questions isn't necessarily the right level of abstraction required at this point. Afterall, it's a Rails application, so let me see if I can figure out how to use application code to find a specific word:

>>> code.ask("is there an API call to find a specific word in the application?")Can I find a specific word?

My RAG-ified Code Llama application produced a detailed answer to the above question, containing some great examples and explanations of the corresponding code.

There is no specific API call to find a specific word in

the application, but there are several ways to retrieve

words based on their attributes or associations.

For example, you can use the `Word.find_by` method to find a

word by its ID, or use the `Word.where` method to find words

that match certain criteria.

You can also use the `include` method to eager load associations,

such as definitions, for a specific word. For example:

@word = Word.find_by(id: 1).includes(:definitions)

This will retrieve the word with an ID of 1 and include its

associated definitions in the response.

If you need to find a specific word based on multiple

attributes, such as the word itself and the part of speech,

you can use the `where` method to create a more complex query.

For example:

@word = Word.where(word: 'hello', part_of_speech: 'noun').first

This will retrieve the first word that matches the word "hello" and

the part of speech "noun".

You can also use the `order` method to sort the results based

on a specific attribute, such as the ID.

For example:

@words = Word.order(:id).page params[:page]

This will retrieve the words in ascending order by their IDs

and paginate the results based on the value of

the `params[:page]` parameter.

Overall, there are several ways to find specific words in an

application based on various attributes or associations. The best

approach will depend on your specific use case and requirements.A detailed response for how to use the Word model.

This type of detailed response with code examples tailored to my specific code base is incredibly helpful. It's akin to reading a tutorial specific to an application. I've easily spent a few hours asking ChatCode various questions about my simple Rails application and I'd say that 80% of the generated answers are helpful. I suspect that I can extract better answers by providing better questions!

I found that asking questions like "how do I create a controller method that removes a word" and "how do I add a new attribute to the word model" yielded fairly competent responses with detailed elaborate steps. In this way, I can see how an AI coding assistant could be helpful when becoming acclimated with a new code base. Rather than spending a lot of context switching time between my IDE and Googling for answers, this workflow is intuitively more productive.

The legacy of code as a use case

Throughout much of my career, I've had to work on ancient code. This sort of code is often labeled as legacy code. It's frequently mystifying and definitely frustrating when getting started. The above use case of leveraging a RAG that combines a specific code base with an LLM, like Code Llama, could certainly make the experience of familiarizing yourself with legacy code way more easy. What's more, what I've demonstrated here is a command line interface. This experience is less optimal than a snazzy UI or even an integrated IDE experience (like Github's Copilot). Both UI and IDE integration options are entirely possible nevertheless!

AI enhanced productivity is here

Throughout my career, I've often heard complaints about new technologies. Java was too slow in 1997. Mobile devices weren't powerful enough to do anything interesting in the early 2000s. And AI isn't too sophisticated – it hallucinates, frequently making mistakes (as I've shown above). But the history of technology has clearly shown that useful technologies improve. Today's LLMs are the worst they'll be. LLMs can only get better as we learn how to both leverage them and importantly, improve them.

The skeptics of today are right to point out deficiencies! But remember that the deficiencies of today will be solved tomorrow provided the underlying technology is promising. Don't overlook AI because of its shortcomings. It's here to stay and will only become more pervasive and pragmatic!

Want to learn more?

I created a Github repository, aptly named windbag, which has the above code. Moreover, Duy Huynh's article on Hackernoon was extremely helpful in demonstrating the various aspects of RAG. It certainly helped me get started. Furthermore, while I used Code Llama, you can just as easily use OpenAI's ChatGPT LLM using ChainLang's API. It would be interesting to compare and contrast the two!

Can you dig it?